MediaPipe vs. TFLite: A Comparison of Pose Estimation Tools

MediaPipe and TensorFlow Lite serve different purposes in machine learning and computer vision:

MediaPipe:

- Provides a set of pre-built components for ML tasks, making it easier to create applications in computer vision, text, and audio.

- Offers solutions across multiple platforms, such as Android, Web, Python, and iOS.

- Supports applications such as object detection, image classification, and gesture recognition.

- Does not expose manual quantization of its models; it provides them already quantized.

TensorFlow Lite (TFLite):

- Designed specifically for running machine learning models on resource-constrained edge devices, such as mobile phones and embedded systems.

- Converts custom models into TFLite (.tflite) files for deployment on a variety of devices.

- Supports platforms such as Android, Web, Python, iOS, microcontrollers, and Coral edge devices.

- Lets you choose how models are quantized, for example with dynamic-range, full-integer, or float16 post-training quantization.
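To make "quantizing a model" concrete: TensorFlow Lite's 8-bit integer quantization maps floats to integers with an affine scheme, real_value = scale * (int8_value - zero_point). The plain-Python sketch below (no TensorFlow dependency) derives a scale and zero point from a tensor's min/max range and round-trips a few values. It illustrates the arithmetic only, assuming the asymmetric, per-tensor int8 variant; the function names are hypothetical, and in practice the TFLite converter computes these parameters for you (for example, after setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on a `tf.lite.TFLiteConverter`).

```python
def quantization_params(rmin: float, rmax: float, qmin: int = -128, qmax: int = 127) -> tuple[float, int]:
    """Return (scale, zero_point) for asymmetric int8 quantization of [rmin, rmax]."""
    # The range must include 0.0 so that real zero maps exactly onto an integer.
    rmin = min(rmin, 0.0)
    rmax = max(rmax, 0.0)
    if rmax == rmin:
        return 1.0, 0
    scale = (rmax - rmin) / (qmax - qmin)
    # The integer that real 0.0 maps to, clamped into the int8 range.
    zero_point = round(qmin - rmin / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int, qmin: int = -128, qmax: int = 127) -> int:
    # Round to the nearest integer step, shift by the zero point, then clamp to int8.
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    # real_value = scale * (int8_value - zero_point)
    return scale * (q - zero_point)


if __name__ == "__main__":
    weights = [-1.2, -0.5, 0.0, 0.37, 2.4]
    scale, zp = quantization_params(min(weights), max(weights))
    for w in weights:
        q = quantize(w, scale, zp)
        print(f"{w:+.3f} -> {q:+4d} -> {dequantize(q, scale, zp):+.4f}")
```

Each value comes back within half a quantization step (scale / 2) of the original, which is the accuracy cost traded for a model roughly four times smaller than its float32 version.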

In summary, if the project faces resource constraints, such as limited memory or hardware that supports only integer operations, TensorFlow Lite is the better fit. If there are no such constraints and ease of use is the priority, MediaPipe is a suitable choice for developing machine learning applications.

Key Features of MediaPipe and TFLite (TensorFlow Lite):

| Feature | MediaPipe | TensorFlow Lite (TFLite) |
| --- | --- | --- |
| Primary Use | Framework for building and deploying multimodal applied ML pipelines for real-time applications, particularly for processing multimedia content such as audio and video. | Lightweight solution for running machine learning models on mobile and embedded devices, focused on inference. |
| Technology | A C++ framework that runs TensorFlow Lite models under the hood, offering pre-built ML solutions for tasks such as face detection, hand tracking, and pose estimation. | A set of tools for converting TensorFlow models into a format optimised for on-device inference, plus the runtime that executes them. |
| Performance | Optimised for real-time applications, especially on mobile and edge devices, with lightweight models and efficient processing pipelines. | Optimised for low latency, small binary size, and low power consumption, making it suitable for inference on devices with limited computational resources. |
| Ease of Use | Provides pre-built models and components, so complex ML pipelines can be integrated into applications with minimal setup. | Simplifies deploying TensorFlow models on mobile and embedded devices, but requires an understanding of TensorFlow for model training and optimisation. |
| Community Support | Strong community with extensive documentation, tutorials, and examples focused on MediaPipe’s capabilities and use cases. | Strong community with extensive documentation, tutorials, and tools for optimising and deploying models on various devices. |
| Customisation | Focused on pre-built models and components for specific tasks, with limited customisation of the underlying ML models. | Offers more flexibility in customising and optimising TensorFlow models for on-device inference, including support for custom operators. |
| Platforms | Supports deployment on a wide range of platforms, including Android, iOS, desktop, and web. | Primarily focused on mobile and embedded platforms, including Android, iOS, and IoT devices. |
| Use Cases | Ideal for applications that require real-time processing of multimedia content, such as augmented reality, gesture recognition, and interactive applications. | Suitable for a wide range of ML applications that need on-device inference, such as image classification, object detection, and natural language processing. |

Need help building an AI project?

At QuickPose, our mission is to build smart pose estimation solutions that elevate your product. Schedule a free consultation with us to discuss your project.

- MediaPipe, by Google, offers basic pose estimation, but turning its raw landmark output into app features requires significant processing by the developer.

- QuickPose enhances MediaPipe with pre-built features, simplifying app development.
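As an example of the post-processing that raw pose output leaves to the developer: MediaPipe's pose model returns 33 landmarks per person with normalized x and y coordinates (plus z), and features such as rep counting or form feedback are typically built on derived quantities like joint angles. The plain-Python sketch below computes an elbow angle from three landmarks. It assumes the landmarks have already been copied into (x, y) tuples; indices 11, 13 and 15 are MediaPipe Pose's left shoulder, left elbow and left wrist, while `joint_angle` and the sample coordinates are hypothetical and not part of MediaPipe's or QuickPose's API.

```python
import math

# MediaPipe Pose landmark indices (33-point topology).
LEFT_SHOULDER, LEFT_ELBOW, LEFT_WRIST = 11, 13, 15


def joint_angle(a, b, c, width=1.0, height=1.0):
    """Angle at point b, in degrees (0-180), between segments b->a and b->c.

    a, b, c are (x, y) landmark coordinates normalized to [0, 1]. width and
    height rescale them to pixels so a non-square frame does not skew the angle.
    """
    ax, ay = a[0] * width, a[1] * height
    bx, by = b[0] * width, b[1] * height
    cx, cy = c[0] * width, c[1] * height
    v1 = (ax - bx, ay - by)
    v2 = (cx - bx, cy - by)
    # atan2(|cross|, dot) gives the unsigned angle between the two vectors
    # without the precision problems of acos near 0 and 180 degrees.
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(math.atan2(abs(cross), dot))


if __name__ == "__main__":
    # Hypothetical landmark values; in a real app they come from the pose model's output.
    landmarks = {LEFT_SHOULDER: (0.50, 0.30), LEFT_ELBOW: (0.50, 0.50), LEFT_WRIST: (0.70, 0.50)}
    angle = joint_angle(landmarks[LEFT_SHOULDER], landmarks[LEFT_ELBOW], landmarks[LEFT_WRIST])
    print(f"left elbow angle: {angle:.1f} degrees")
```

Passing the frame's pixel width and height matters because normalized coordinates stretch differently along each axis: the same three points give 45 degrees in a square frame but about 26.6 degrees when the frame is twice as wide as it is tall.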

Effortlessly Integrate Pose Estimation into Your Mobile Apps with QuickPose

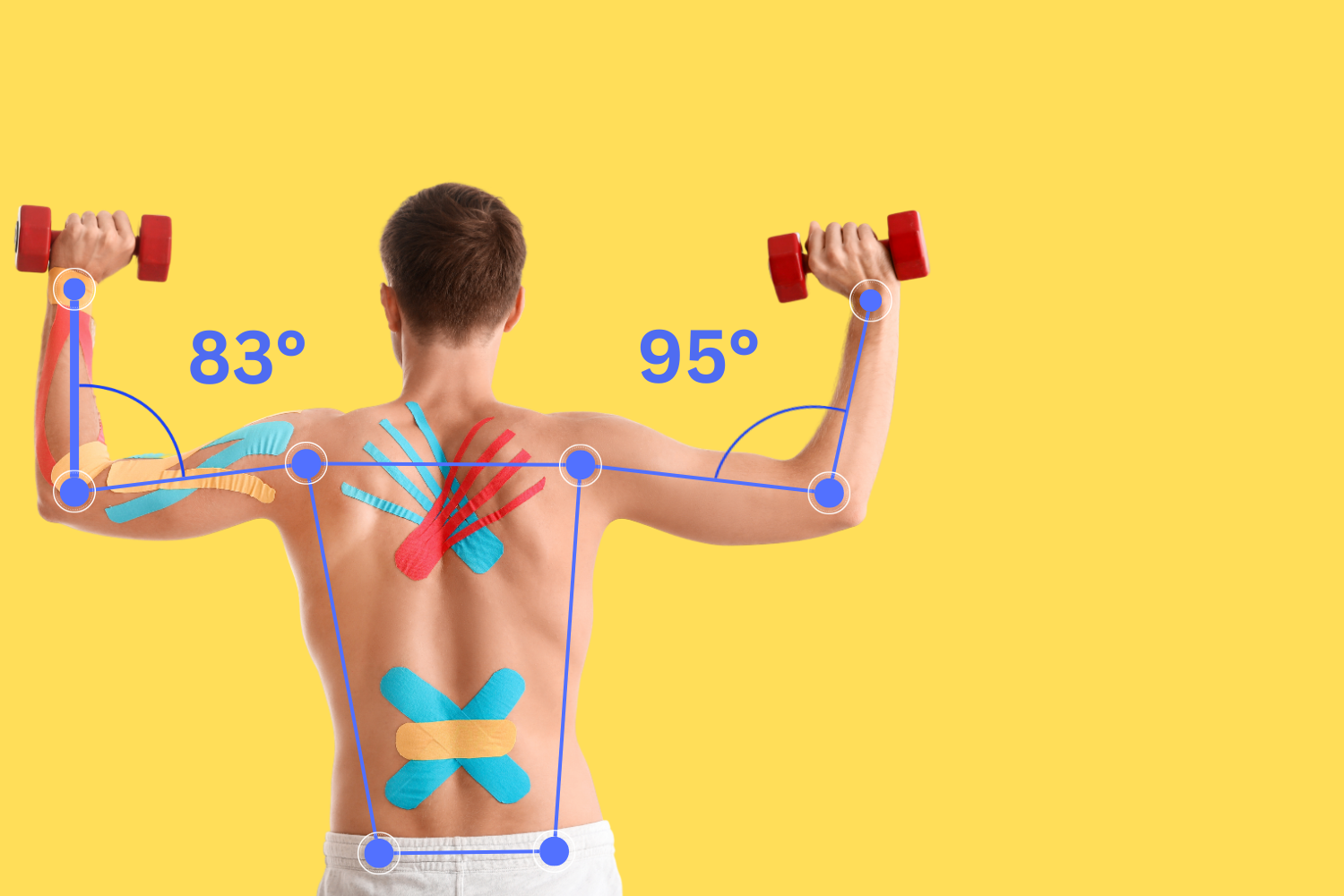

- Accurate pose estimation

- Customisable output

- Fast processing

- Scalable

- Pre-built models

- Open-source framework

How QuickPose can be used

Add the QuickPose iOS SDK to your app in one of two ways:

- Build it yourself with our GitHub repo: integrate QuickPose using our GitHub repository and documentation.

- Add QuickPose with our integration team: book a consultation to discuss your use case and capabilities.